Google Now

Android Design, Google, 2012-2013

Google started out as the most effective way for people to search millions of web pages. Over the years, Google Search has expanded to include places, images, videos, news, books and much more. The Google search box has also been extended into a means of performing other tasks by typing, such as defining or translating words, performing calculations, converting currency values or finding quick answers from Google's Knowledge Graph.

Google Search has long been one of the most used digital interfaces on the planet, and the Android platform has rapidly increased its market share to become the world's most used mobile OS. By the end of 2012 it was installed on nearly 3 in every 4 smartphones worldwide.

The opportunities for the future of search on mobile devices are complex, exciting and far reaching. The Google Now project on Android brought together search, voice and new predictive answers to take the next step in defining the user experience of Google's core service. It was central to the successful launch of Android 4.1 Jelly Bean and the Nexus 7 tablet. Building on this success, it has the potential to change radically what 'to Google' something will mean for people in the future.

Personalised, predictive answers

Google Now predictive cards give you answers before you need to search for them, taking advantage of the growing potential, for mobile devices in particular, to display contextually relevant, personalised information at just the right time and place. For example, patterns of travel are identified so that alerts about disruptions to your daily commute can be displayed before you head off. As well as location and search history, other Google services such as Google+, Gmail and Calendar provide a source of personalised information such as planned events, flights, package deliveries and much more. This is cross-referenced with live data from the web to provide status updates, just-in-time traffic and travel routes, and other timely information and actions. Further cards are based on an individual's interests and activities, such as movies and events nearby, an upcoming concert for a favourite band, useful information when travelling abroad, or live results for favourite sports teams.

My role

The overall design and engineering project involved collaborations within Android and across Google. I led an early conceptual phase of the design project, exploring the future of search on the Android platform with Visual Designer Andy Stewart. For the first release of Google Now, I led the design delivery of the search experience (of both web and on-device content) and animation designs for transitions between the core states of the app. For further releases I worked on the integration of different modes of search input, including Search with camera (Google Goggles) and Sound Search (Google Ears), as well as developments in the Now cards and the overall application UI framework.

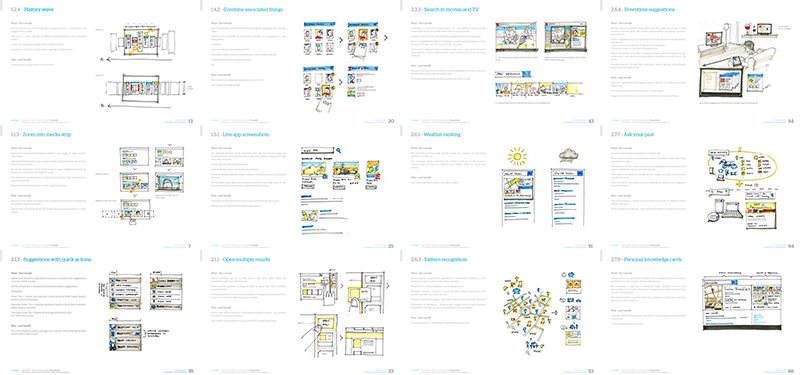

Samples from a catalogue of design concepts for the near- and long-term future of search on Android.

Over 50 concepts were organised into: Touchable (Preview, Overview, Query Building, Refining Queries, Layout) and Frameworks (Actions, Apps, Entities, System UI, Vision & Voice, Suggest & Predict, Personal & Social). They have informed design developments in the Google Now app and further explorations of future search experiences on Android.

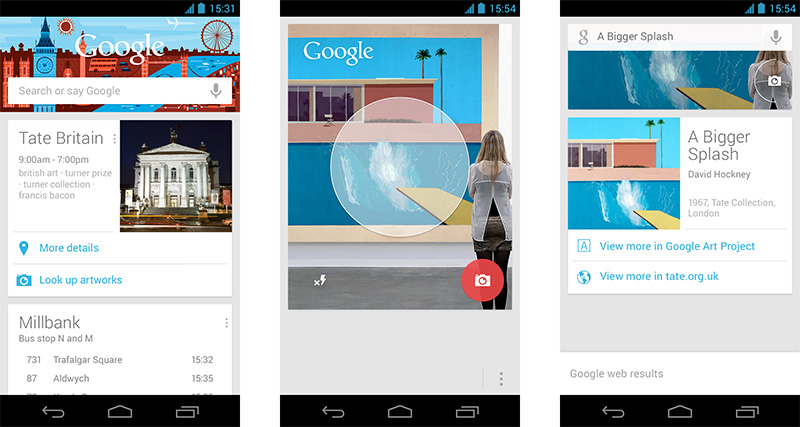

At Tate Britain in London, Google Now provides contextual information and actions for onward travel and about the gallery itself. This card surfaces the opportunity to look up artworks with the device camera during your visit.

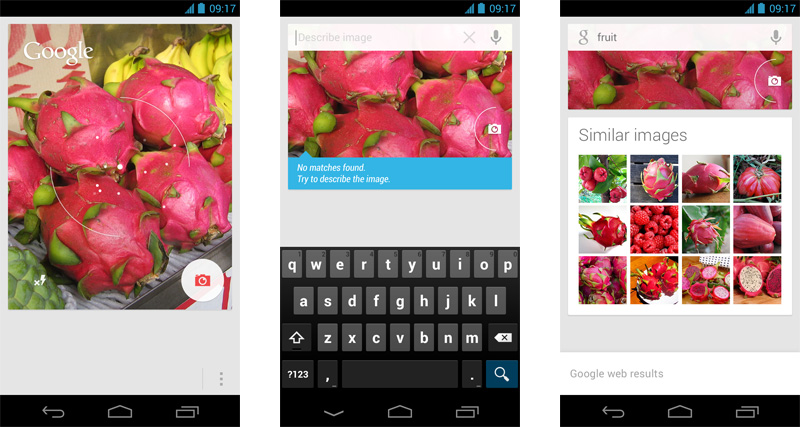

Search with camera works well for specific kinds of searches: artworks, books, DVDs, film posters, barcodes and landmarks. Recognition of less geometrically distinct objects is more challenging. By asking people to add a short description to an image, closer and more useful visual matches can be found.

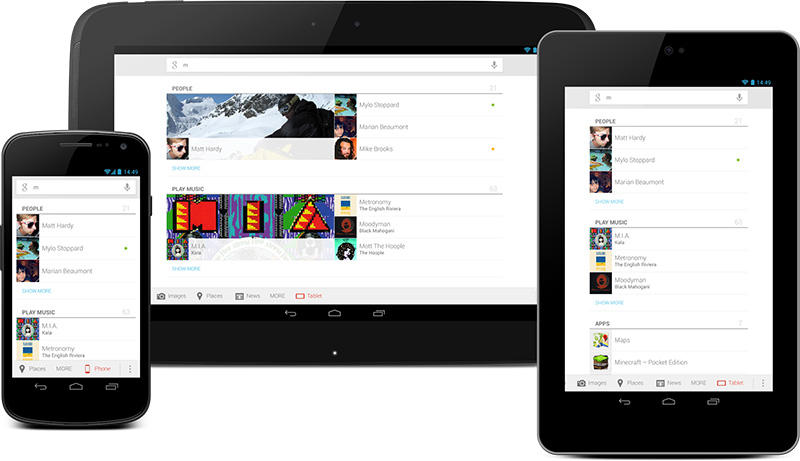

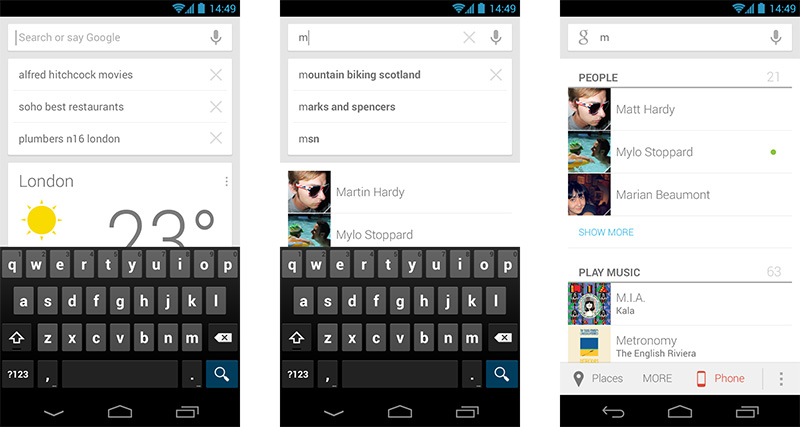

On-device search results on Galaxy Nexus, Nexus 10 and Nexus 7.

Sample search screens going from predictive cards to typing a search query and viewing results.

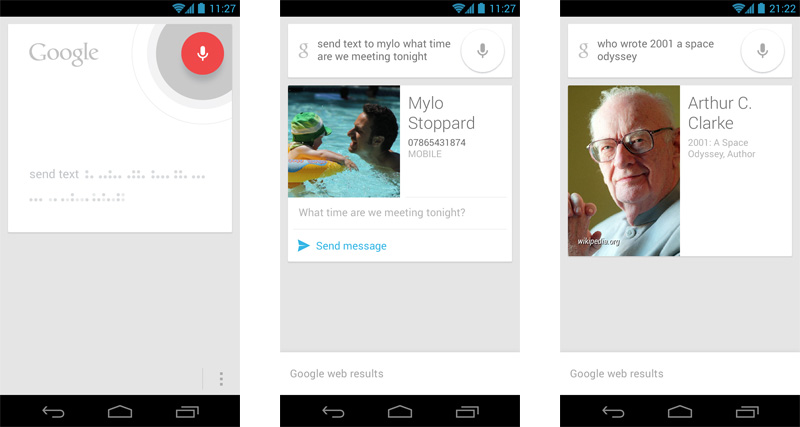

The project took major steps in natural language voice input as a means to get things done and find answers quickly, combining the knowledge of Google with close integration into the synced service accounts and native apps on the Android framework. The voice interface was designed by Simon Tickner, Peter Hodgson and Andy Stewart.

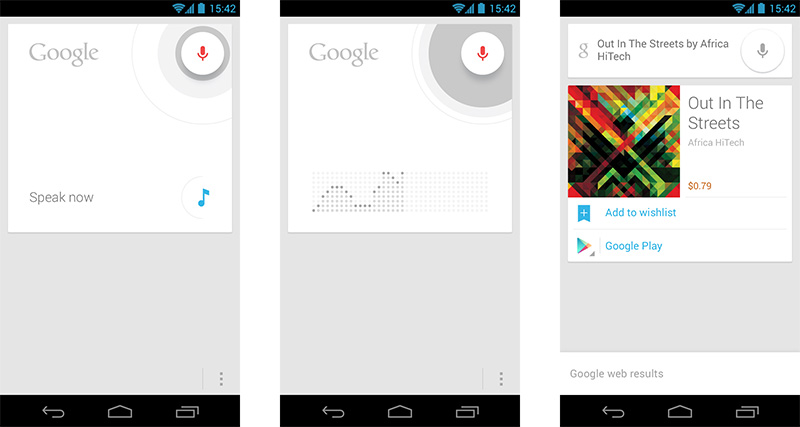

Another mode of searching, Sound Search detects the presence of music in ambient sound whenever the mic is open.